Guardrails Sequence Diagrams

This guide provides an overview of the process of invoking guardrails.

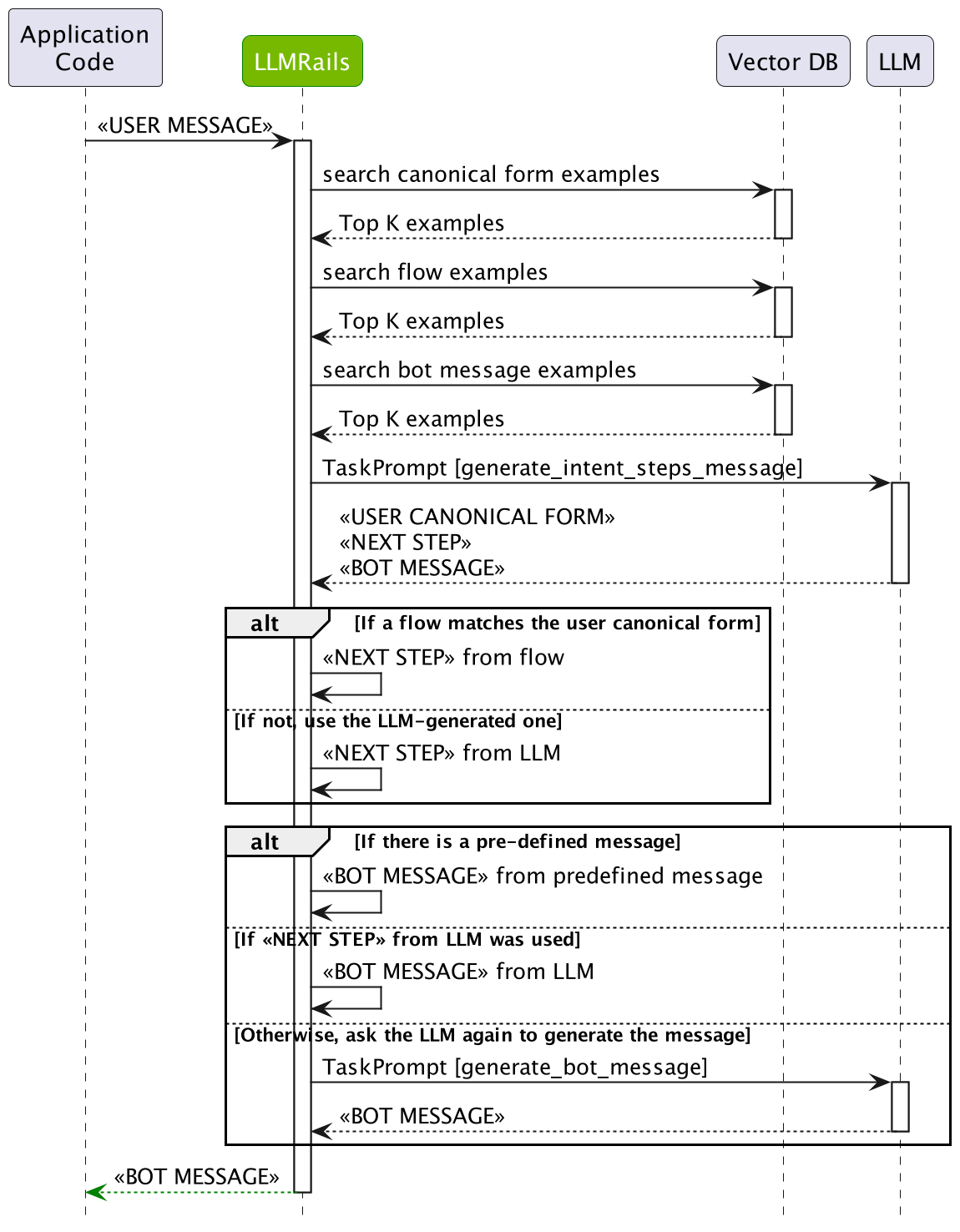

The following diagram depicts the guardrails process in detail:

The guardrails process has multiple stages that a user message goes through:

Input Validation stage: The user input is first processed by the input rails. The input rails decide whether the input is allowed as is, should be altered, or should be rejected.

Dialog stage: If the input is allowed and the configuration contains dialog rails (i.e., at least one user message is defined), then the user message is processed by the dialog flows. This will ultimately result in a bot message.

Output Validation stage: After a bot message is generated by the dialog rails, it is processed by the output rails. The output rails decide whether the output is allowed as is, should be altered, or should be rejected.
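The three stages above can be sketched as a small pipeline. This is a minimal illustration and not the library's implementation: `RailResult`, `input_rail`, `dialog_rails`, `output_rail`, `process`, the blocked-term list, and the refusal text are all hypothetical names and values chosen for the example, and the sketch assumes that dialog rails are configured.

```python
from dataclasses import dataclass


@dataclass
class RailResult:
    """Outcome of a rail: whether the text may pass, and its (possibly altered) form."""
    allowed: bool
    text: str


# Hypothetical values, used only for this illustration.
BLOCKED_TERMS = {"password"}
REFUSAL = "I'm sorry, I can't respond to that."


def input_rail(text: str) -> RailResult:
    """Reject input containing a blocked term; otherwise alter it by trimming whitespace."""
    if any(term in text.lower() for term in BLOCKED_TERMS):
        return RailResult(allowed=False, text=text)
    return RailResult(allowed=True, text=text.strip())


def dialog_rails(user_message: str) -> str:
    """Stand-in for the dialog stage, which ultimately produces a bot message."""
    return f"You said: {user_message}"


def output_rail(text: str) -> RailResult:
    """Allow the output, but alter it by masking one hypothetical unwanted word."""
    return RailResult(allowed=True, text=text.replace("darn", "****"))


def process(user_message: str) -> str:
    """Run input validation, the dialog stage, and output validation in order."""
    checked_input = input_rail(user_message)
    if not checked_input.allowed:
        return REFUSAL
    bot_message = dialog_rails(checked_input.text)
    checked_output = output_rail(bot_message)
    if not checked_output.allowed:
        return REFUSAL
    return checked_output.text
```

Each rail returns both a decision and a text so that "allowed", "altered", and "rejected" can be expressed with a single return type.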

The Dialog Rails Flow

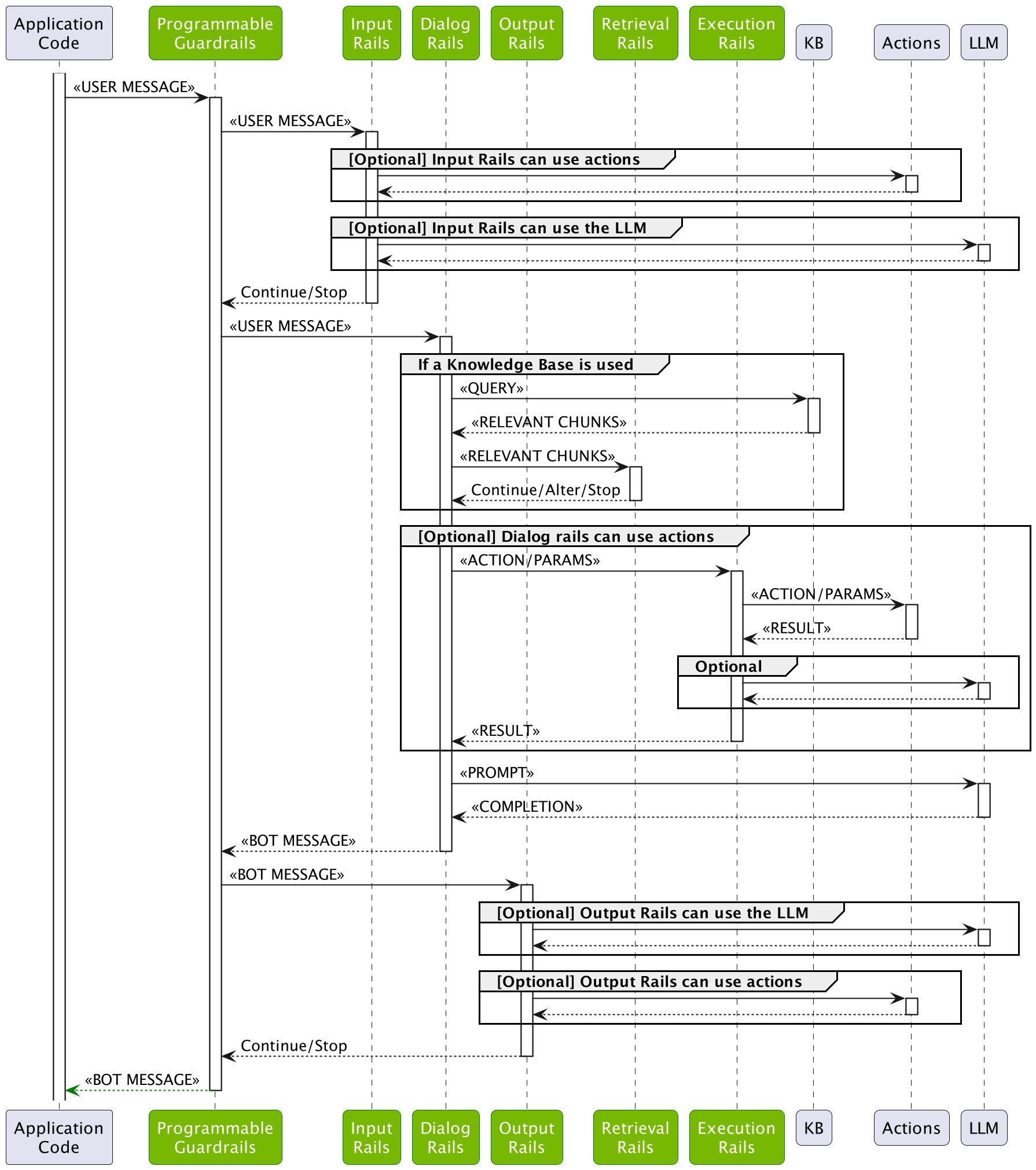

The diagram below depicts the dialog rails flow in detail:

The dialog rails flow has multiple stages that a user message goes through:

User Intent Generation: First, the user message has to be interpreted by computing its canonical form (a.k.a. user intent). This is done by searching for the most similar examples among the defined user messages, and then asking the LLM to generate the canonical form for the current message.

Next Step Prediction: After the canonical form for the user message is computed, the next step needs to be predicted. If there is a Colang flow that matches the canonical form, then the flow will be used to decide the next step. If not, the LLM will be asked to generate the next step using the most similar examples from the defined flows.

Bot Message Generation: Ultimately, a bot message needs to be generated based on a canonical form. If a pre-defined message exists, the message will be used. If not, the LLM will be asked to generate the bot message using the most similar examples.
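The three dialog stages can be sketched as follows. This is an illustrative approximation under stated assumptions, not the actual implementation: the example data, the function names, the prompt wording, and the word-overlap `similarity` function (a simple stand-in for whatever similarity search is really used) are all hypothetical, and the LLM is modeled as any callable that maps a prompt string to a completion string.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]

# Hypothetical configuration data for the illustration.
USER_EXAMPLES: Dict[str, List[str]] = {
    "express greeting": ["hello", "hi there"],
    "ask capabilities": ["what can you do", "how can you help me"],
}
FLOWS: Dict[str, str] = {"express greeting": "express greeting back"}
BOT_MESSAGES: Dict[str, str] = {"express greeting back": "Hello! How can I help?"}


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap, standing in for a real similarity search."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def top_examples(message: str, k: int = 2) -> List[str]:
    """Return the k defined user messages most similar to the input, as prompt lines."""
    scored = [
        (similarity(message, example), f'user "{example}" -> {intent}')
        for intent, examples in USER_EXAMPLES.items()
        for example in examples
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [line for _, line in scored[:k]]


def generate_user_intent(message: str, llm: LLM) -> str:
    """Stage 1: ask the LLM for the canonical form, given the most similar examples."""
    prompt = "\n".join(top_examples(message)) + f'\nuser "{message}" ->'
    return llm(prompt).strip()


def predict_next_step(user_intent: str, llm: LLM) -> str:
    """Stage 2: use a matching flow if one exists, otherwise ask the LLM."""
    if user_intent in FLOWS:
        return FLOWS[user_intent]
    return llm(f"Next step after user intent: {user_intent}").strip()


def generate_bot_message(bot_intent: str, llm: LLM) -> str:
    """Stage 3: use the pre-defined message if one exists, otherwise ask the LLM."""
    if bot_intent in BOT_MESSAGES:
        return BOT_MESSAGES[bot_intent]
    return llm(f"Bot message for: {bot_intent}").strip()


def run_dialog_rails(message: str, llm: LLM) -> str:
    """Chain the three stages for one user message."""
    user_intent = generate_user_intent(message, llm)
    bot_intent = predict_next_step(user_intent, llm)
    return generate_bot_message(bot_intent, llm)
```

In this sketch, a message whose intent matches a defined flow and a pre-defined bot message needs only one LLM call (for the user intent); when neither exists, all three stages call the LLM.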

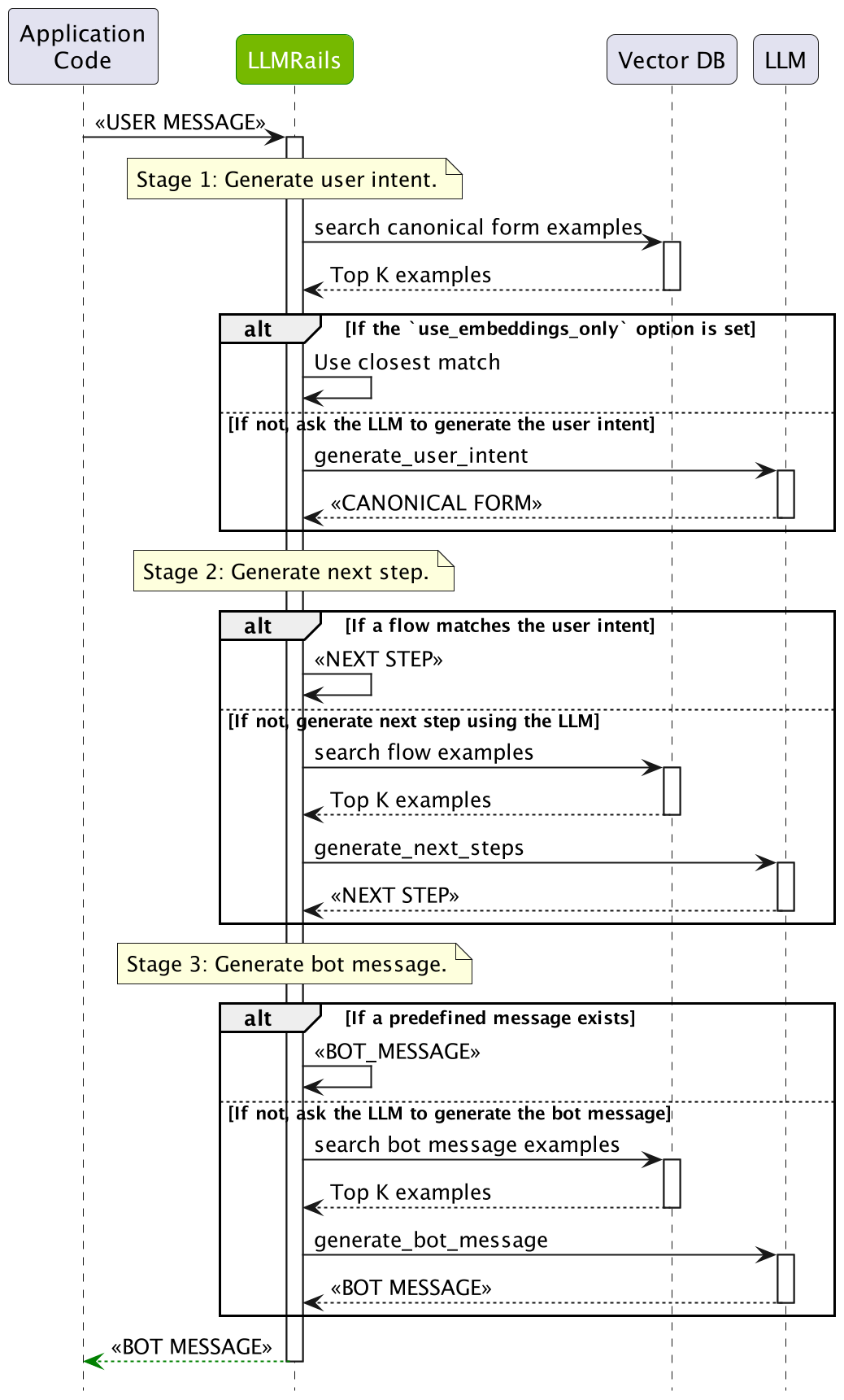

Single LLM Call#

When the single_llm_call.enabled is set to True, the dialog rails flow will be simplified to a single LLM call that predicts all the steps at once. The diagram below depicts the simplified dialog rails flow:
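As a rough illustration of the idea, the sketch below makes one LLM call and reads the user intent, the next step, and the bot message from a single completion. The JSON response format, the prompt wording, and the name `single_call_dialog` are assumptions made for this example; the source does not specify how the combined prediction is encoded.

```python
import json
from typing import Callable, Dict

EXPECTED_KEYS = ("user_intent", "next_step", "bot_message")


def single_call_dialog(message: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Predict all three dialog steps with one LLM call (hypothetical JSON protocol)."""
    prompt = (
        "Return a JSON object with the keys user_intent, next_step, bot_message.\n"
        f'user "{message}"'
    )
    # One call replaces the three separate calls of the regular dialog flow.
    result = json.loads(llm(prompt))
    missing = [key for key in EXPECTED_KEYS if key not in result]
    if missing:
        raise ValueError(f"LLM response is missing keys: {missing}")
    return {key: result[key] for key in EXPECTED_KEYS}
```

The trade-off this illustrates is fewer round trips to the LLM in exchange for relying on one completion to get all three steps right.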